We’re already at the dawn of the age of killer robot warfare, and none of us are prepared to face its consequences. AI activists and researchers understand the danger of giving a machine complete autonomy to decide whether to kill or preserve a life. That is why they have banded together to boycott a South Korean university whose Artificial Intelligence lab has joined hands with a defense company to develop an army of killer robots.

South Korea may be the only country that has openly admitted to using AI to develop autonomous weapons, but who knows how many others have started building their killer robot armies behind closed doors? Could Elon Musk’s worst nightmare of AI bringing the Earth to the brink of destruction finally be coming true?

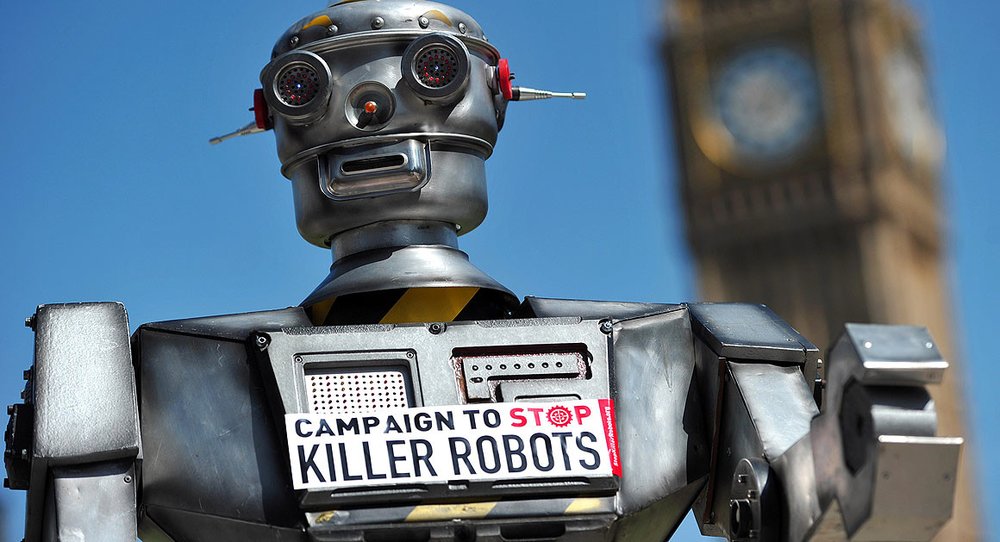

Researchers and activists are increasingly worried about the use of artificial intelligence in creating autonomous weapons and robot armies.

Researchers Boycott South Korean University

More than 50 renowned researchers from 30 countries jointly called for a boycott of the Korea Advanced Institute of Science and Technology (KAIST) over its new joint project with Hanwha Systems, a leading Korean weapons manufacturer. In their view, the project is a clear violation of the ethical principles of the Artificial Intelligence community.

In an open letter protesting the development of killer robots in South Korean science labs, the researchers said they were cutting off all ties with the university until it abandoned its dangerous plan to develop autonomous weapons and pledged to use AI only for the good of humanity. The protesting researchers wrote that they would no longer collaborate with KAIST on any future research projects or host visitors from the university.

Hanwha Already Manufacturing Banned Cluster Munitions

The president of the South Korean university, Sung-Chul Shin, said in a statement that news of the boycott had deeply saddened him, and he sought to reassure the world that KAIST does not intend to participate in the development or use of lethal weapons and killing machines.

Hanwha, the weapons manufacturer funding the robot project, already makes cluster munitions, which are banned by an international convention signed by 120 countries. However, South Korea stayed out of the treaty, as did major military powers such as the U.S., China, and Russia.

Sung-Chul Shin’s Statement

Sung-Chul Shin said that he is a strong proponent of ethics and human rights, which is why he will personally make sure that his university does not take part in any research that may bring harm to the human race. Ironically, the KAIST president’s views on Artificial Intelligence and defense technology were very different when the research center first opened on February 20, before the worldwide boycott of his university.

At the time, Shin said he was confident the center would lay the foundation for cutting-edge defense technology, including AI-based command and decision systems and unmanned combat machines. That statement has since been deleted, after the research program angered AI activists around the world.

The boycott ended after the president of KAIST assured the researchers that his institution would not take part in manufacturing AI weaponry.

Countries Call for a Ban on Killer Robots

The news of autonomous weapons being developed in South Korea comes only days before a UN meeting in Geneva aimed at finding legal and ethical solutions to the growing threat posed by AI-powered weapons. More than 20 countries have already agreed that banning the production and use of autonomous weapons has become an absolute necessity in the new age of Artificial Intelligence.

There is also considerable uncertainty about how accurate autonomous killing machines would be and how reliably they could distinguish an ally from an enemy.

Many AI activists fear that if militaries around the world start building their own robot armies, it could trigger a Terminator-like scenario in which Artificial Intelligence completely controls the human race.

Engineers already face a similar problem with self-driving cars, which have the autonomy to make decisions on the road that aren’t always the right ones. For example, if a dog crosses the road in the path of a high-speed autonomous car, should the car swerve sharply to save the dog while jeopardizing the passengers’ safety? What if it is a child instead of a dog, or a bus full of students? Now imagine having to make these decisions on the battlefield.